Simple Algorithm to Play a Simple Game

Anyone who has worked with Machine Learning has probably used the Logistic Regression algorithm, or at least heard of it. Well, here is a simple application that watches you play Tic-Tac-Toe and learns how to play the game.

How does it work?

As you play, the algorithm observes the game. It isolates the moves that led to a win; draws and losing moves are not used. It then uses Logistic Regression to train the underlying intelligence; let us represent it by θ. As it gains more experience, it makes more accurate predictions about the game, and in this way it learns to play.
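The filtering step described above can be sketched in Python. This is only an illustration under assumptions the article does not state: the board encoding (+1 for the mover's own mark, -1 for the opponent's, 0 for empty), the move-list format, and the function names `winner` and `training_samples` are all hypothetical.

```python
# Hypothetical encoding (not from the original article): the board is a list of
# nine ints, read from the perspective of the player about to move:
# +1 = that player's mark, -1 = opponent's mark, 0 = empty.

WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(marks):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if marks[a] is not None and marks[a] == marks[b] == marks[c]:
            return marks[a]
    return None


def training_samples(moves):
    """Turn one game (a list of space indexes 0-8, X moves first) into
    (input, target) pairs, keeping only the winning player's moves.
    Draws yield no samples."""
    marks = [None] * 9
    history = []  # (player, board before the move, chosen space)
    for turn, space in enumerate(moves):
        player = "X" if turn % 2 == 0 else "O"
        # Encode the board from the mover's point of view.
        board = [0 if m is None else (1 if m == player else -1) for m in marks]
        history.append((player, board, space))
        marks[space] = player
        if winner(marks):
            break
    won_by = winner(marks)
    if won_by is None:
        return []  # draw or unfinished game: nothing to learn from
    samples = []
    for player, board, space in history:
        if player == won_by:
            target = [1 if j == space else 0 for j in range(9)]  # one-hot output
            samples.append((board, target))
    return samples
```

For example, `training_samples([0, 3, 1, 4, 2])` (X wins along the top row) returns three samples, one for each of X's moves, while a drawn game returns an empty list. Encoding the board from the mover's point of view is a design choice that lets wins by X and by O share one set of parameters.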

The math behind it

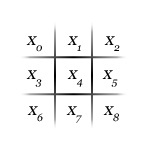

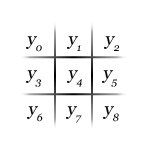

It uses Gradient Descent along with the logistic sigmoid function to train and predict. Prediction is done by nine individual Logistic Regression units, and the output of each unit corresponds to one of the nine spaces on the Tic-Tac-Toe board.
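A minimal Python sketch of the nine prediction units, assuming each unit has its own bias and nine weights stored in a hypothetical 9 x 10 list `theta`. The helper `predicted_space` picks the unit with the highest output, which corresponds to the darkest circle in the demo; it does not skip occupied spaces, because the article does not say whether the original does.

```python
import math


def sigmoid(z):
    """Logistic sigmoid g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(theta, x):
    """Run nine independent logistic regression units.

    theta[j][0] is the bias of unit j and theta[j][1 + i] is the weight for
    input x[i]. Returns nine values in [0, 1], one per space."""
    return [
        sigmoid(theta_j[0] + sum(w * xi for w, xi in zip(theta_j[1:], x)))
        for theta_j in theta
    ]


def predicted_space(theta, x):
    """Index (0-8) of the unit with the largest output."""
    h = predict(theta, x)
    return max(range(len(h)), key=lambda j: h[j])
```

With all parameters at zero every unit outputs 0.5, so the prediction carries no information until training has adjusted the parameters.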

Figure 1: Layout of inputs and layout of outputs.

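Let $i, j \in \{1, 2, \dots, 9\}$ be the indexes of the nine spaces in the game. Also let

$$y_j = \begin{cases} 1 & \text{if you chose space } j \\ 0 & \text{otherwise} \end{cases}$$

represent the space chosen by you (to mark either X or O). Let $x_1, x_2, \dots, x_9$ represent the input to the algorithm, one value per space (see Figure 1). Let the intelligence that maps the input to a predicted output be represented by the parameters $\theta_{ji}$. The prediction $h_j$ for the $j$-th space is made as per the equations shown below:

$$h_j = g\left(\theta_{j0} + \sum_{i=1}^{9} \theta_{ji}\, x_i\right), \qquad g(z) = \frac{1}{1 + e^{-z}}$$

Note: $\theta_{j0}$ is the bias parameter. It shifts the weighted sum $\sum_i \theta_{ji} x_i$ by a constant, which has the same effect as applying an affine transform to the linear polynomial.

Here, $h_j$ represents the predicted output and $y_j$ represents the move made by you. So, the purpose of the training process is to adjust the values of $\theta_{ji}$ until $h_j \approx y_j$ for every space. This is done using the Gradient Descent algorithm.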
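The article does not spell out the cost function, so the following is a worked form of the update assuming the usual cross-entropy (log-loss) cost for logistic regression. Here $h_j^{(k)}$ and $y_j^{(k)}$ are the predicted and actual outputs for space $j$ in the $k$-th training sample, $x_i^{(k)}$ is its $i$-th input (with $x_0^{(k)} = 1$ so that the bias $\theta_{j0}$ fits the same formula), $\alpha$ is the learning rate and $m$ is the number of training samples. Each of the nine units is trained independently.

$$J(\theta_j) = -\frac{1}{m}\sum_{k=1}^{m}\Big[y_j^{(k)}\log h_j^{(k)} + \big(1 - y_j^{(k)}\big)\log\big(1 - h_j^{(k)}\big)\Big]$$

$$\frac{\partial J}{\partial \theta_{ji}} = \frac{1}{m}\sum_{k=1}^{m}\big(h_j^{(k)} - y_j^{(k)}\big)\,x_i^{(k)}$$

$$\theta_{ji} \leftarrow \theta_{ji} - \alpha\,\frac{1}{m}\sum_{k=1}^{m}\big(h_j^{(k)} - y_j^{(k)}\big)\,x_i^{(k)}, \qquad i = 0, 1, \dots, 9$$

Repeating this update moves each $h_j^{(k)}$ toward its target $y_j^{(k)}$.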

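| Description | Symbol |
|---|---|
| Learning rate, a real-valued number that controls the size of each gradient descent step and hence how fast the training algorithm converges. | $\alpha$ |
| Number of training samples. | $m$ |
| Intelligence parameter indicating how the $i$-th input affects the prediction for the $j$-th space ($i = 0$ is the bias). | $\theta_{ji}$ |
| Actual output for the $j$-th space in the $k$-th training sample. | $y_j^{(k)}$ |
| Predicted output for the $j$-th space in the $k$-th training sample. | $h_j^{(k)}$ |
| Represents the $k$-th training sample. | $x^{(k)}$ |
| Represents the value of the $i$-th input in the $k$-th training sample. | $x_i^{(k)}$ |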
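A minimal pure-Python sketch of the training loop, applying a batch gradient descent update of the form $\theta_{ji} \leftarrow \theta_{ji} - \frac{\alpha}{m}\sum_k (h_j^{(k)} - y_j^{(k)})\,x_i^{(k)}$ to each of the nine units independently. The function names, the default `alpha` and `iterations`, and the zero initialisation are hypothetical choices, not taken from the original source code. Spaces are numbered 0 to 8 in the code rather than 1 to 9.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(samples, alpha=0.5, iterations=1000):
    """Batch gradient descent for nine independent logistic regression units.

    samples: list of (x, y) pairs, x = nine inputs, y = nine 0/1 targets.
    Returns theta, a 9 x 10 list: theta[j][0] is the bias of unit j and
    theta[j][1 + i] multiplies input x[i]."""
    m = len(samples)
    theta = [[0.0] * 10 for _ in range(9)]
    for _ in range(iterations):
        for j in range(9):  # each output unit is trained independently
            grad = [0.0] * 10
            for x, y in samples:
                xs = [1.0] + list(x)  # x_0 = 1 carries the bias term
                h = sigmoid(sum(t * v for t, v in zip(theta[j], xs)))
                for i in range(10):
                    grad[i] += (h - y[j]) * xs[i]
            for i in range(10):
                theta[j][i] -= alpha * grad[i] / m
    return theta


def predict(theta, x):
    """Nine outputs in [0, 1], one per space."""
    xs = [1.0] + list(x)
    return [sigmoid(sum(t * v for t, v in zip(theta_j, xs))) for theta_j in theta]
```

For example, training on a single sample in which you chose the centre of an empty board, `train([([0] * 9, [0, 0, 0, 0, 1, 0, 0, 0, 0])])`, yields parameters for which `predict` gives its highest output for space 4, the centre.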

How does all that work?

Below is a Silverlight version of the application; try it out. Note that you have to make the moves for both players: the predictor only predicts moves, it does not play. The predictor is not trained until you finish a game with a winning outcome; after that, you should see its predictions. The darkest circle marks the space where the algorithm thinks you will make your next move. The source code is available here: TicTacToe.zip.